Imagine you are playing a game of Russian Roulette. This game is played, of course, with a revolver:

- There are

players.

- There are

bullets in the cylinder.

- There are

empty chambers in the cylinder (i.e.

chambers total).

At the start of the round, the bullets are loaded in a random order with unknown distribution, and the cylinder is cycled. The players then take turns firing the revolver.

- If the firing pin strikes an empty chamber, the player succeeds in the current turn, and the next player fires the revolver in the next turn.

- When the firing pin strikes a bullet, the game ends with the current player’s loss.

Now, a simple riddle. Assume that you are one of 2 players, and there are 3 bullets + 3 empty chambers. Should you start first or second to have a higher chance of winning?

Now of course, if you’re familiar with this blog, you know we don’t check specific situations. Instead, let’s analyse a simpler variant of this problem first.

two-face’s gamble

Let’s say our good friend Harvey Dent has come to visit the family for the night. And he wants to play a game with us because we play games with others. He sets the rules as follows:

- On every turn, Two-Face flips a biased coin where

denotes the probability of heads.

- If it lands heads, the player is spared, and the turn goes to the next player.

- If it lands tails, the player loses, and the game ends.

- Players take turns in rotation until someone loses.

Notice that this is essentially the same scenario as the first, except with a twist where the cylinder is cycled between every turn (i.e. probability with replacement). This is equivalent to the case where .

In this situation, should I start first or second?

markov chain

In this scenario, we have a Markov Chain where heads corresponds to a transition between 2 players. Tails corresponds to a loss of the current player.

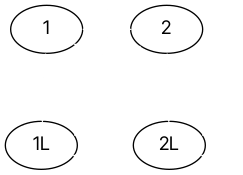

Let’s define our state set with 4 possible states; one for each player, and one for the loss of each player:

And WLOG, we assume Player 1 starts:

Our transition matrix is:

Now, the first thing we want to analyse is the absorption probability of each absorbing state. This means that we are interested in the last 2 components of , where:

From diagonalisation, we obtain the neat result of:

observations

Notice first, that:

This means that Player 2 always stands a better chance at winning. This, of course, makes intuitive sense, since the probability of losing does not change over time, so it’s always more risky for the one starting first.

Notice also that as (probability of heads) increases, Player 1 stands a better chance at winning. This also makes intuitive sense.

results

For :

For neatness, the leading zeros are not included. Player 1 has a 66.7% chance of losing.

russian roulette

Now let’s look at the original scenario. Our Markov Chain used in the variant can no longer work, because the later we are in the game, the higher the chance of firing.

Notice also that by the pigeonhole principle, there is a hard limit on the number of turns. The maximum number of turns that can elapse before someone loses is equal to the number of empty chambers. Once all empty chambers are struck, the next strike will definitely hit a bullet.

markov chain

The simplest way to represent this as a Markov Chain is that, instead of representing each player as a state, we represent each turn as a unique state (on top of the loss states). In total, our state vector will contain elements:

Below is the state diagram for :

Since the transition matrix for this problem is usually very sparse, I will not be writing it down. However, in general, it will have the canonical form:

where:

contains transition probabilities between transient states.

contains transition probabilities from transient to absorbing states.

contains self-transition probabilities for absorbing states.

Once we have a sparse representation for , we next need to find the absorption probabilities. By expansion:

where the geometric series . It is relatively easy to obtain the above expression.

In the limit where , all transience would have already decayed away (i.e.

), so we have:

observations

Now this is very interesting. The matrix has appeared before in my earlier post Probability Theory with Linear Algebra, as the expected time starting from a given state to remain in another given state. Why does it appear here, where we’re trying to find the probability of being absorbed?

That’s because is a very handy matrix in Markov Chains, functioning as a dual of the transition matrix, representing time instead of probabilities. It is aptly named the Fundamental Matrix. It reveals to us a neat physical interpretation of

:

-

is the expected time in state

, starting from state

.

is the probability of being absorbed into state

from state

.

is hence the probability of being absorbed into state

, starting from state

, accounting for the contributions of all possible intermediate states.

results

For :

For neatness, the leading zeros are not included. Player 1 has a 65% chance of losing, slightly lower than the case with replacement (as expected).